Imagine a world where your survey doesn’t just sit there passively, waiting for participants to fill blank boxes. It listens. It nudges. It asks the question you didn’t know you needed, to the consumer who didn’t know they had the answer. And it does so at a scale that means you still get the numbers you can take into the boardroom.

That’s not science fiction; it’s what happens when we let AI do what it’s good at (conversation at scale) while we humans do what we are good at: framing problems, interpreting meaning, and holding the line on truth. At Spark, we believe that by collaborating with the robots we can create a blended future in research where qualitative depth and quantitative confidence are not opposing forces but runners in the same race: Bolt and Dina Asher-Smith, neck and neck.

Spark has developed a proprietary product to make this a reality – we call it Conversive. I want to explain why conversational quant is becoming essential, where it can go wrong if we’re careless, and how to use it to get closer to what really matters: human truth. Human truth that powers better decisions. Sound like a plan? Good.

If time is tight: the full article takes about 8 minutes to read, and there’s a 3-minute summary at the end, so jump down there if you want to.

The false choice we were sold.

Market research has, for decades, been nudged into a false binary: fast but shallow vs. rich but slow. On one side, large-N quant with the reassuring crispness of significance tests; on the other, the messy brilliance of qual: raw emotion, contradiction, nuance. Any of us experienced marketers and researchers reading this will know that life is about more than ones and zeros.

AI goads us to pick one side again, this time a different binary: “machines will replace moderators” vs. “machines can’t understand people.” Both takes are lazy, in my opinion. The first ignores the social, contextual nature of meaning; the second underestimates how far language models have come at tracking intent, tone, and trajectory in conversation. By trajectory, I mean where we are going, our real feelings on the matter.

The truth is somewhere else. AI can scale the craft of good qualitative probing without discarding the discipline of quant. It can turn a static survey into a responsive conversation, one that listens in real time and follows promising threads, while still delivering robust numbers. We call this Conversive. More on this later (or, if you’re the impatient type, skip to the bottom for an intro).

From text boxes to boxes of text to dialogue

Let’s start with the humble open-ended question. It’s the research equivalent of shouting into the wind: “Any other comments?” Participants do their best. Some write essays, some write “N/A,” others write “ajshdkjasd” (those guys are the worst), and most write just enough to move on.

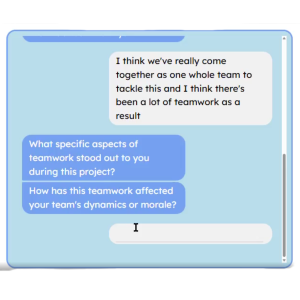

However, genuine insight rarely lives in a single answer; it emerges in the exchange. A good moderator hears a half-formed story and knows where to go next: “When you say it feels ‘cheap,’ is that the packaging, the price, or something else? Can you tell me about the last time you felt that?” That second or third turn is everything.

AI, used well, allows your survey to do exactly that. It can follow up, sense ambiguity, ask for examples, reflect language back to participants, and probe gently for feelings and trade-offs. Not with gimmicks, but with the curiosity of a good moderator (or a 10-year-old, quite often the same thing in terms of curiosity!). And because it’s embedded in a quant instrument, you’re not trading away sample size or structure. You keep the base sizes, the quotas, the weights, and the tidy crosstabs, as well as the human texture.

Why this matters now.

Three forces make conversational quant more than a nice-to-have:

- Complexity of decisions

Inflation, new channels, fragmented media, shifting norms: these are issues that can’t be reduced to a single metric. Decision makers need both a read on the numbers and the reasons behind them. “What’s happening? So what? Now what?” stops being a slide format and becomes an operating rhythm. We have been using What³ for many years at Spark. We don’t do talking shops, but increasingly there is a lot more to talk about!

- Erosion of signals

People are more media-literate and brand-savvy than ever. They’re also busier. Straight lines between stimulus and response are rare. Subtext, contradiction, and context matter more; static instruments miss them.

- AI as an everyday interface

We’re all getting used to speaking to systems in natural language. Participants no longer find chat experiences weird; they find box-ticking more unnatural. Meeting people where they are improves engagement and data quality. We have always been fans of adapting to the participant’s world as opposed to forcing them to adapt to ours, and this is just a natural evolution of that.

I hear you: “But won’t the AI make things up?”

A fair question, and one that deserves a serious answer. Yes, large language models can hallucinate when asked to invent facts. In research, we don’t ask them to invent. It’s one of the reasons why, as I write this, synthetic qual is still not good enough for most projects: we can bias it in so many ways. So, to do this right, we have to constrain AI. Yes, back in your box, AI: we’re in charge now!

Think of a good AI-moderated flow as a guardrailed conversation:

- Bounded purpose: The AI knows the study goals, the brand context, the allowed tone, and the “do not go” zones.

- Deterministic routing: It uses a library of vetted follow-ups aligned to the guide, with dynamic selection based on what the participant just said. Or put simply, we put AI through its paces thousands of times, so it gets us and what we are all about. No waffle, thanks AI.

- Evidence-first summaries: It labels sentiment, extracts themes, and suggests hypotheses, always traceable back to the original verbatims.

- Human oversight: Researchers inspect transcripts, adjust probes, and review outputs. The AI is a tool, not the decider.

- Real, not synthetic: While we use AI to probe and stimulate conversation, all the answers are from real people, lots of them. There’s no made-up content.

Done this way, AI doesn’t replace judgement; it concentrates it, freeing humans from the mechanical labour of chasing clarifications across hundreds of responses, so we can spend more time on sense-making and implications.
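To make the "bounded purpose" and "deterministic routing" guardrails above a little more concrete, here is a minimal sketch in Python. It is not how Conversive is built; it is an illustration under stated assumptions. The probe library, the trigger words, the "do not go" words and the opt-out phrases are all hypothetical, and simple keyword matching stands in for whatever language understanding a real system would use.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Probe:
    probe_id: str
    triggers: frozenset  # words that make this probe relevant
    text: str            # vetted wording, never generated on the fly


# Hypothetical library of vetted follow-ups (wording borrowed from this article).
PROBE_LIBRARY = (
    Probe("cheap", frozenset({"cheap"}),
          "When you say it feels 'cheap', is that the packaging, the price, or something else?"),
    Probe("fluff", frozenset({"fluff", "gimmick"}),
          "What made you think that? Past experience, or something about the wording?"),
)
FALLBACK = Probe("last_time", frozenset(),
                 "Can you tell me about the last time you felt that?")

NO_GO_WORDS = frozenset({"medication", "diagnosis"})      # assumed "do not go" zone
OPT_OUTS = frozenset({"skip", "n/a", "prefer not to say"})  # assumed opt-out phrases


def select_probe(answer: str) -> Optional[Probe]:
    """Return a vetted follow-up for this answer, or None if we should not probe."""
    normalized = answer.strip().lower()
    if not normalized or normalized in OPT_OUTS:
        return None  # respect blanks and opt-outs
    words = set(re.findall(r"[a-z]+", normalized))
    if words & NO_GO_WORDS:
        return None  # stay out of the "do not go" zones
    for probe in PROBE_LIBRARY:  # first match wins, so routing is repeatable
        if words & probe.triggers:
            return probe
    return FALLBACK  # a generic, still-vetted probe


print(select_probe("It just feels cheap to me").text)
```

The point of the design is that every question a participant can see comes from a reviewed list, so a researcher can audit the whole space of possible follow-ups before fieldwork starts.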

Understanding at scale (without cosplay)

There’s a risk, when we talk about “human-like” chat, of becoming performative: teaching a machine to cosplay being human. That’s not the point. The point is pragmatic empathy: questions that help people tell truer stories about themselves and their choices.

For example:

- Mirror their language (“You said it feels ‘faffy,’ what part is the faff?”).

- Ask for the last time, not the ideal time (“When did this last happen?”).

- Invite contradiction (“Does that ever clash with the way you actually shop?”).

- Probe consequences (“If that claim were removed, what would you do instead?”).

These are moderator moves that surface motivations, trade-offs and social context, without a one-hour depth interview. The AI doesn’t need to “feel” anything; it needs to behave in ways that help participants express themselves. It needs to think like a Sparkie.

The numbers still matter.

Let’s be very clear: leaders still need quantitative confidence. Sample size, quotas, weighting, significance: none of that goes away. The blended approach keeps the spine of a well-built quant study. It just adds responsive depth at each relevant point.

This is crucial for three reasons:

- Prioritisation: You can size which themes are prevalent, and which belong to passionate minorities.

- Segmentation: You can map emotions, needs and language to segments or cohorts, not just to “people in general.” For example: “Gen Z thought this.”

- Actionability: You can put effect sizes next to stories. “A third of light buyers felt X for reason Y; here’s how that changes when we do Z.” AND here’s what they had to say about it!

Where standard open ends give you a bag of quotes to cherry-pick, conversational quant gives you structured depth: sentiments and themes that can be cross-tabulated like any other variable.
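As a rough illustration of what "cross-tabulated like any other variable" means, the sketch below counts coded themes by segment and turns them into shares. The rows are invented purely for illustration, the segment and theme names are assumptions, and the shares are unweighted; a real study would apply its weights and run its usual significance tests on top.

```python
from collections import Counter, defaultdict

# Invented, illustrative rows: one coded theme per participant.
coded_responses = [
    {"segment": "parents", "theme": "no_sugar_crash"},
    {"segment": "parents", "theme": "no_sugar_crash"},
    {"segment": "parents", "theme": "no_sugar_crash"},
    {"segment": "parents", "theme": "scepticism"},
    {"segment": "gym_goers", "theme": "scepticism"},
    {"segment": "gym_goers", "theme": "scepticism"},
    {"segment": "gym_goers", "theme": "scepticism"},
    {"segment": "gym_goers", "theme": "no_sugar_crash"},
]


def theme_shares(rows, row_key="segment", col_key="theme"):
    """Cross-tabulate col_key by row_key and return row-wise shares."""
    counts = defaultdict(Counter)
    for row in rows:
        counts[row[row_key]][row[col_key]] += 1
    shares = {}
    for group, themes in counts.items():
        base = sum(themes.values())  # unweighted base size for this group
        shares[group] = {theme: n / base for theme, n in themes.items()}
    return shares


table = theme_shares(coded_responses)
for segment, shares in table.items():
    print(segment, {theme: f"{share:.0%}" for theme, share in shares.items()})
```

Because each theme is just another coded variable, the same function works for sentiment labels or any other code frame attached to the verbatims.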

Culture and language are not afterthoughts.

One of the most exciting shifts in this space is the ability to run natively across languages without flattening culture. This isn’t a one-button translation trick; it’s about cultural nuance baked in:

- Recognising idioms and local metaphors.

- Respecting politeness norms and intensity.

- Adjusting probes to avoid leading or offending.

- Summarising findings in English (or any target language) while preserving the flavour of the original.

When you’re testing ideas across markets, this matters. You want your decision deck to be in one language; you want your conversations to be in many.

What changes for researchers

If surveys can talk back, the researcher’s role evolves in three ways:

- Designer of dialogue

We aren’t just writing questionnaires; we’re designing conversational systems. That includes tone, escalation rules, “if-this-then-probe” logic, and stop conditions. It’s closer to crafting a discussion guide, at scale. We need to set the guardrails for the machine; that takes an experienced researcher.

- Curator of truth

We define what “good” looks like in the outputs: how themes are clustered, how emotion is scored, how uncertainty is flagged. We decide which patterns are interesting, and which are noise.

- Strategic interpreter

We bring the story together: context from the brand, signals from culture, counterfactuals, and the operational reality of the client. AI helps us get more raw material, faster; it does not absolve us of the burden of judgement.

This shift is energising. No, really! It’s new research, in a way that some of us researchers who have been around for a while haven’t seen in a long time. It moves our effort away from administration and towards insight craft. If you are truly curious, then this is the time to unleash your inner Curious George and really enjoy the changes.
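For readers who like to see the "designer of dialogue" idea written down, here is a small, hedged sketch of "if-this-then-probe" logic with stop conditions. The thresholds, the opt-out phrases and the function name are all assumptions made for illustration; a researcher would set the real rules per study.

```python
# All values below are hypothetical design choices, not settings from any product.
MAX_PROBES_PER_QUESTION = 2   # stop condition: don't badger
DETAILED_ANSWER_WORDS = 25    # stop condition: the story is already there
OPT_OUTS = {"skip", "n/a", "prefer not to say"}


def next_action(answer: str, probes_asked: int) -> str:
    """Decide whether to ask a follow-up ("probe") or continue ("move_on")."""
    text = answer.strip().lower()
    if not text or text in OPT_OUTS:
        return "move_on"  # gentle exit, no penalty
    if probes_asked >= MAX_PROBES_PER_QUESTION:
        return "move_on"  # probe budget for this question is spent
    if len(text.split()) >= DETAILED_ANSWER_WORDS:
        return "move_on"  # answer is already detailed
    return "probe"        # short but genuine answer: ask the next question


# A scripted exchange on one question, to show the budget running out.
answers = ["It's fine", "Just feels a bit faffy", "The lid is hard to open"]
probes_asked = 0
for answer in answers:
    action = next_action(answer, probes_asked)
    print(f"{answer!r} -> {action}")
    if action == "probe":
        probes_asked += 1
```

Writing the rules this plainly is the point: tone and wording are craft, but when to stop asking should be something a researcher can read, challenge and change.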

A day in the life of conversational quant (a practical illustration)

A food brand wants to understand how a new on-pack claim lands: “Natural Energy, No Crash.” The quant skeleton covers awareness, comprehension, purchase intent, and claim preference across n=1000 shoppers with nationally representative quotas. Embedded at three points are conversational probes, alongside the quant:

- Meaning-making

After comprehension, the survey asks: “In your own words, what does ‘No Crash’ mean for you?” If someone says, “No sugar crash,” it follows with: “Tell me about the last time you felt a sugar crash. What happened?” If another says, “Marketing fluff,” it probes: “What made you think that? Past experience, or something about the wording?”

- Trade-off framing

After a shelf mock-up choice task, it asks: “You picked Variant B. Which part had the biggest influence? If you could change one word, which would it be and why?”

- Emotional residue

Near the end, it asks: “Think of a time in your day when this would help or hinder. What’s that moment?” Then it gently nudges for specifics (place, people, feeling).

Outputs show that “No Crash” resonates with time-pressured parents for late afternoon snacks but triggers scepticism among gym-goers who’ve been burned by some high-energy drinks before. The quantified read sizes these groups; the conversational themes tell you why, and how to reframe. The team walks away not just with a tick (or cross) against a claim, but with language to adopt, moments to target, and risks to mitigate. As a client, you can have your cake and eat it. Or, in this case, have your high-energy drink and down it.

Guardrails that matter (ethics isn’t optional)

With great power comes… well, you know. Spark is an MRS partner company and as such we recently attended their Ethics in AI training. If we’re serious about human truth, we need to be serious about how we collect and use it.

- Consent and clarity: People must know they’re chatting with an AI system inside a survey, and what happens to their words. No theatre, no deception.

- Respectful pacing: Don’t badger. Allow “skip,” “prefer not to say,” and gentle exits without penalty.

- Bias vigilance: Monitor which voices are amplified or muffled. Audit prompts for leading language. Stress-test on edge cases. This is one of the issues we will see when the data is synthetic; it is much less of a problem with real people.

- Data minimisation: Only collect what you need; keep it secure; delete responsibly.

Ethics is not a slide at the back; it’s part of the product.

For the sceptics: show me the value.

Leadership cares about outcomes: speed, cost, quality, and impact.

- Speed: Instead of running qual depths plus a separate quant survey, run a single Conversive study with Spark. You’ll save days!

- Cost: You redirect spend from lengthy interviews to large, conversational surveys, reserving specialist qual for the hardest problems or perhaps for when a more tactile approach is needed, e.g. testing NPD.

- Quality: Engagement goes up; satisficing goes down. You get fewer single-word answers and more grounded stories, with fewer contradictions.

- Impact – the big one: Findings land better. Stakeholders hear the voice of customers and see the numbers, making it easier to commit to action.

Where this goes next

Today’s conversational quant lives inside surveys. Tomorrow, it links across ecosystems:

- Pre- and post-exposure chats around digital media.

- Post-purchase probes that surface friction moments and route to product teams.

- Segment “avatars” trained on hundreds of thousands of data points, available to pressure-test ideas in plain English. (I say next, but we have that already at Spark: we call it Personify. If you have a segmentation and want to know more, then get in touch.)

A quiet revolution, not a noisy one

The best research innovations don’t draw attention to themselves. They remove friction. Participants feel oddly seen; researchers feel oddly calm; clients feel oddly confident. The magic isn’t in a flashy interface. It’s in the work: in better-designed probes, clearer decision logic, and outputs that honour both the head and the heart.

At Spark, we’ve been building and refining this approach, embedding responsive, AI-led chats directly into robust quant surveys, because it helps us do what we’ve always tried to do: get closer to human truth and make it useful. It’s not a gimmick. It’s a new baseline.

If your current toolkit feels like it forces you to choose between speed and soul, consider adding conversation to your quant. Let the survey talk back. You might be surprised how much people will tell you if you simply ask the next question.

Final thought: technology in service of truth

There’s a line I keep coming back to: machines should make us more human, not less. In research, that means using AI to remove the dullness and the drudgery, to invite better stories, and to give us the time and space to listen. It means resisting the urge to automate the parts of our job that are the job (judgement, ethics, imagination) and automating the parts that slow those down.

Put differently: AI isn’t here to replace your moderator. It’s here to make every researcher a better moderator, and every decision a little more grounded in the rich, contradictory, wonderfully human reality of how people actually live, choose and change.

Conversive is Spark’s newest proprietary, AI-powered research solution, the practical expression of everything in this article. It turns static surveys into responsive, human-like dialogues, so you get qualitative richness with quantitative confidence in a single instrument. Conversive leads to an average 136% increase in word count and an average 30% increase in the quality of those responses.

What Conversive Does

- Embeds conversation inside quant: No extra platforms. Your survey includes a guided, AI-moderated exchange at scale.

- Asks the “next right question”: Dynamic probes adapt to each answer to uncover motivations, emotions, and friction points, without adding respondent fatigue.

- Works across 30+ languages: Cultural nuance preserved; outputs combined for decision-making.

- Keeps humans in the loop: Guardrails, traceability to verbatims, and researcher oversight ensure quality, ethics, and relevance.

What you get

- Board-ready numbers (sizing, significance, priorities) and board-changing stories (why it matters, where it breaks, how to fix).

- Faster cycles, higher engagement, fewer “N/A” answers, and debriefs that move from debate to decision.

- A single, integrated report blending quant clarity with qual depth, including sentiment mapping, emotional analysis, and thematic exploration.

If you’re ready to let your surveys talk back and finally retire the false choice between fast and deep, Conversive is how you do it. Get in touch and let’s see if Conversive can work for you.

10-Point Summary: When the Survey Talks Back (3 Min Read)

- The Vision: Conversational Quantitative Research

Traditional surveys are passive; they collect answers but rarely probe deeper.

Spark Market Research’s “Conversive” approach uses AI to turn static surveys into dynamic conversations, blending the depth of qualitative research with the scale and rigour of quantitative methods.

- Breaking False Binaries

Market research has long been split between “fast but shallow” quantitative and “rich but slow” qualitative approaches.

AI introduces a new binary: “machines will replace moderators” vs. “machines can’t understand people.” The author argues both are simplistic.

The real opportunity is for AI to scale good qualitative probing, making surveys responsive and insightful without sacrificing sample size or statistical confidence.

- How Conversive Works

AI-powered surveys can follow up, clarify, and probe for deeper meaning, much like a skilled human moderator.

This approach keeps the structure and reliability of quant research while adding human texture and nuance.

- Why Now? Three Driving Forces

Complexity of Decisions: Modern business challenges require both numbers and the stories behind them.

Erosion of Signals: People are more media-savvy and harder to read; context and contradiction matter.

AI as Everyday Interface: Natural language interactions are now normal, making conversational surveys more engaging and effective.

- Guardrails and Ethics

AI in research must be carefully constrained: clear study aims, vetted follow-ups, evidence-based summaries, and human oversight.

Ethics are paramount: transparency, respectful pacing, bias monitoring, and data minimisation are essential.

- Practical Illustration

Example: A food brand evaluates a new claim (“Natural Energy, No Crash”) using Conversive. The survey adapts to responses, probes for real-life experiences, and uncovers nuanced insights about different consumer segments. The result: actionable findings that combine robust numbers with rich stories.

- The Researcher’s Evolving Role

Researchers become designers of dialogue, curators of truth, and strategic interpreters.

AI frees them from administrative tasks, allowing more focus on insight and meaning.

- Outcomes and Value

Conversive delivers speed, cost savings, higher engagement, and more impactful findings.

It enables board-ready numbers and board-changing stories, blending quant clarity with qual depth.

Conversive leads to an average 136% increase in word count and an average 30% increase in the quality of those responses.

- Looking Ahead

Conversive is just the beginning; future research will link conversational insights across customer journeys and segments.

The goal is not to make machines “human,” but to use technology to help humans understand each other better.

- Final Thought

AI should make research more human, not less. It’s a tool to enhance judgement, ethics, and imagination—not replace them.

Key Takeaway

Conversive represents a quiet revolution in market research, using AI to create responsive, ethical, and deeply insightful surveys. It bridges the gap between speed and depth, helping decision-makers get closer to “human truth” at scale.